Abstract

We quantified gender representation on the editorial boards of 435 mathematical sciences journals. Using a combination of automated tools and crowdsourcing, we determined that 8.9% of the 13,067 math editorships in our sample are held by women, compared with 16% among faculty in mathematical sciences departments that grant doctoral degrees. Our analysis also detected subgroups (e.g., journals, publishing houses, and countries) that are statistical outliers, with significantly higher or lower representation of women than the background level.

Introduction

We work in mathematics and computer science: two fields where men far outnumber women. This is a source of ongoing concern to us. We want our fields to advance human knowledge as much as possible. This means we want all of the best people involved, and this assuredly includes women. Unfortunately, girls and women face many barriers to participating in mathematics and other STEM fields, ranging from stereotypes conveyed in early childhood, to workplace policies unfavorable to women, to implicit and explicit bias, and much more.

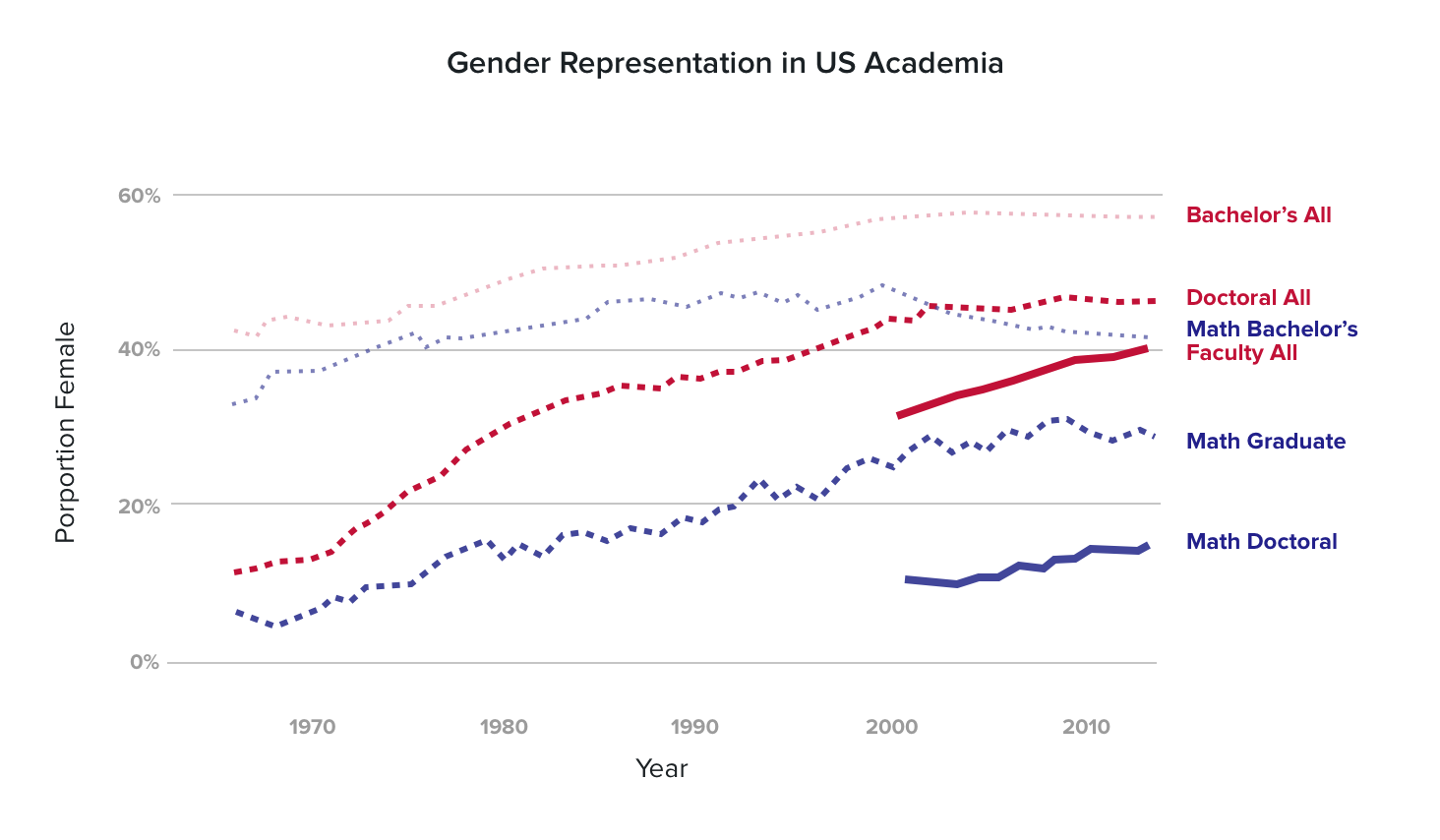

The so-called leaky pipeline in the mathematical sciences (in blue) as compared to all academic fields combined (in red).

Across all fields, women constitute 58% of bachelor’s degree recipients, but only 41% within the mathematical sciences. Women account for 31% of new Ph.D.s in the mathematical sciences, and merely 16% of faculty in mathematical sciences departments at doctoral-granting institutions. This decreasing representation at successive career stages is often referred to as the leaky pipeline.

One day in 2015, in an online discussion, colleagues of ours noted some mathematics journals with no women editors and asked whether any gender statistics were available for journal editors. We realized that none were, and this struck us as important but missing information. Journal editors are arguably the leaders of an academic field. They are the gatekeepers of publishing, controlling to some extent a field’s intellectual discourse, and certainly controlling the publishing fates of many individual scholars. Journal editorships also convey prestige, play a positive role in professors’ tenure decisions, and can offer important opportunities for networking. Finally, there is the so-called role model effect: the demographics of a field’s leadership can influence who is retained in the field.

Once we realized that no one had quantified gender representation on mathematics journal editorial boards, we decided to do it ourselves, both to assess whether the leaky pipeline really does leak up through the highest echelon of the field and to provide a benchmark against which to measure any changes in gender representation over time.

Figure 3. Interactive chart of all math journal editors and genders, grouped by publisher.

Methods

In order to explain our methodology, it’s helpful first to understand a little bit of background related to crowdsourcing and gender.

Overview of methods

Crowdsourcing

Much of our research hinges on Amazon Mechanical Turk (MTurk, for short), a crowdsourcing platform for posting Human Intelligence Tasks (HITs) online, particularly tasks that cannot easily be automated by a computer.

Our study used MTurk because information about journal editorial boards is stored all over the internet in a variety of formats, including XML, HTML, PDF documents, plain text, spreadsheets, and more. The only way to get all the information is to look it up, journal by journal, and type all of the information into a consistent format. This sort of task is ideally suited to MTurk.

How does MTurk work?

The MTurk ecosystem consists of requesters and workers. Requesters are the people who post HITs that they would like completed. Along with the description of the HIT, the requester posts a fee that the worker will earn. Workers see lists of HITs online and can choose which ones to complete. When the work is complete, the requester can approve or reject the work. If the work is approved, the worker gets paid.

You might be wondering if requesters can abuse the system by rejecting legitimate work, thereby denying the worker their rightful wage. In theory, yes, this can happen. However, there is a rich online social community of workers who share information. Requesters who act in bad faith very quickly earn a bad reputation and are effectively shunned: workers no longer choose to complete their HITs, making the MTurk platform useless for these requesters.

Similarly, you might be wondering if workers can abuse the system by submitting bogus work. In theory, yes, they can. However, the requester always retains the right to reject the work. Furthermore, the requester can set conditions that workers must meet to even undertake the HIT. For this project, we only allowed workers who had completed at least 1000 HITs (so they were experienced with the platform) and who had at least a 99% approval rating on past work (so they had a demonstrated track record). To further ensure valid data, we required that each of our HITs be completed by multiple workers.
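As a rough illustration, the worker filters described above correspond to MTurk qualification requirements. The sketch below only assembles keyword arguments of the kind accepted by boto3's MTurk `create_hit` call and never contacts AWS. The two qualification type IDs are Amazon's system qualifications for "number of HITs approved" and "percent of assignments approved"; the helper name `build_hit_params` and the title, description, reward, and durations are hypothetical placeholders, not the study's actual settings.

```python
# Amazon Mechanical Turk system qualification type IDs.
NUMBER_HITS_APPROVED = "00000000000000000040"
PERCENT_ASSIGNMENTS_APPROVED = "000000000000000000L0"


def build_hit_params(question_xml: str, reward: str, assignments: int = 3,
                     min_hits: int = 1000, min_approval_pct: int = 99) -> dict:
    """Build keyword arguments for boto3's MTurk `create_hit` (not called here).

    Title, description, reward, and durations are illustrative placeholders.
    """
    return {
        "Title": "Look up a journal's editorial board",  # hypothetical title
        "Description": "Record each board member's name, title, and affiliation.",
        "Reward": reward,                 # MTurk expects a string, e.g. "0.50"
        "MaxAssignments": assignments,    # independent workers per HIT
        "LifetimeInSeconds": 7 * 24 * 3600,
        "AssignmentDurationInSeconds": 3600,
        "Question": question_xml,
        "QualificationRequirements": [
            {   # experienced workers only
                "QualificationTypeId": NUMBER_HITS_APPROVED,
                "Comparator": "GreaterThanOrEqualTo",
                "IntegerValues": [min_hits],
            },
            {   # demonstrated track record
                "QualificationTypeId": PERCENT_ASSIGNMENTS_APPROVED,
                "Comparator": "GreaterThanOrEqualTo",
                "IntegerValues": [min_approval_pct],
            },
        ],
    }
```

With AWS credentials configured, one could pass the result as `boto3.client("mturk").create_hit(**params)`. `MaxAssignments` is the setting that makes several different workers complete the same HIT.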

Gender

We also used crowdsourcing to infer gender for part of our database of editorships.

We asked MTurk workers to guess the gender associated with a given editor based on the editor's name and other information found on the internet, including text and images related to the editor. Workers were also asked to indicate their degree of certainty in the gender they guessed.

In keeping with best practices and modern understanding, we allowed workers to assign an "alternate identification" if they felt the male/female binary choice did not apply. Most importantly, we recognize that an individual's gender may not fit neatly into traditionally used categories, and that gender is most appropriately expressed and explained by that individual. Still, we must have gender data in order to begin quantifying the representation of women, and so we proceeded, cognizant of the limitations of our study.

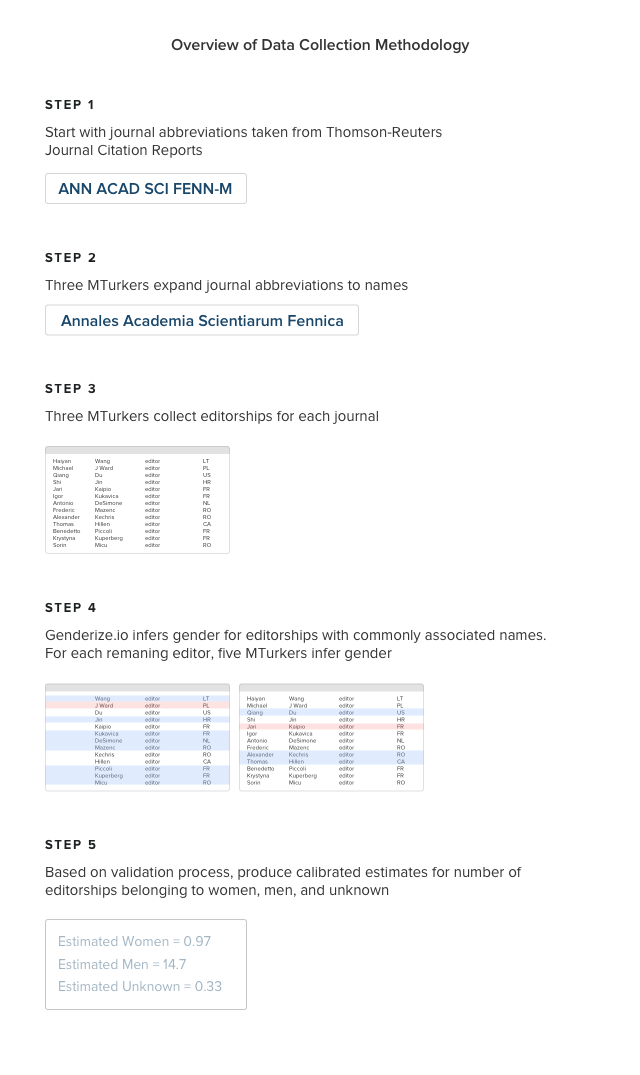

We began our project with all 605 mathematical sciences journals that are indexed by Thomson Reuters Journal Citation Reports (JCR). We could only obtain the abbreviated journal titles from JCR, so we gave these abbreviations to MTurk workers and asked them to provide us with the full journal title and the URL of the editorial board listing. Each journal was looked up by three workers.

For 406 journals, all workers reported the same full journal title; for the remaining 199 journals, we determined the full title ourselves. For 183 journals, all workers reported the same URL; for the remaining 422 journals, we looked up the URL manually. Two of the 605 journals appeared to be defunct, so we removed them from our study.
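The agreement rule above (accept an answer only when all three workers report the same thing, otherwise look it up by hand) can be sketched as follows. Comparing answers after collapsing case and whitespace is an assumption made for this illustration; the function names are hypothetical.

```python
from collections import Counter
from typing import List, Optional


def normalize(answer: str) -> str:
    """Case- and whitespace-insensitive form used when comparing answers."""
    return " ".join(answer.split()).casefold()


def resolve(answers: List[str]) -> Optional[str]:
    """Return the agreed answer if every worker gave the same one.

    None marks an item that needs manual lookup.
    """
    counts = Counter(normalize(a) for a in answers)
    if len(counts) == 1:
        # Unanimous: keep the first worker's original spelling.
        return answers[0].strip()
    return None
```

For example, three submissions of "Annals of Mathematics" that differ only in capitalization or spacing resolve to one title, while a single worker answering with an abbreviation sends the journal to manual lookup.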

Now with a list of 603 journal titles and editorial board URLs, we asked MTurk workers to go to each editorial board listing and provide the following information for each board member:

- first, middle, and last name

- title on editorial board

- institutional affiliation

- city

- state/province

- postal code

- country

Workers could leave blank any information that was not available. Each editorial board was initially recorded by three workers. For 229 journals, at least two workers reported the same number of editorial board members to within 10%; we took these as valid and saved the intersection of the reported board members. For the other 374 journals, we asked an additional MTurk worker to take a stab at recording the editorial board, and used the same 10% criterion for acceptance. In the end, we had good agreement for 435 journals, comprising a database of 13,067 editorships.
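One plausible reading of the acceptance rule is sketched below: two board sizes "agree" if they differ by at most 10% of the larger one, and when enough workers agree, the names they all reported are kept. Both the exact tolerance definition and the helper names are assumptions; real names would also need normalizing before set operations.

```python
from typing import List, Optional, Set


def sizes_agree(a: int, b: int, tol: float = 0.10) -> bool:
    """True if two board sizes differ by at most `tol` of the larger (assumed definition)."""
    return abs(a - b) <= tol * max(a, b)


def accept_board(boards: List[Set[str]], tol: float = 0.10,
                 min_agree: int = 2) -> Optional[Set[str]]:
    """Return the intersection of the first group of workers whose sizes agree.

    Each board is one worker's set of editor names. None means no group of at
    least `min_agree` workers agreed, so another worker should be asked.
    """
    for anchor in boards:
        # The anchor always agrees with itself, so it is part of its own group.
        group = [b for b in boards if sizes_agree(len(anchor), len(b), tol)]
        if len(group) >= min_agree:
            return set.intersection(*group)
    return None
```

A journal where two workers list ten editors each (differing in one name) and a third lists only four would be accepted, keeping the nine names the two agreeing workers share.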

Finally, we had to infer the gender of each editorial board member. First, we used the genderize.io database, which, at the time we used it, contained 216,286 unique first names spanning 79 countries and 89 languages. Each first name carries with it the number of times it was known to be associated with a man and with a woman in the underlying data set. For instance, querying the name “Alex” returned a count of 5,856 instances, of which 87% were associated with men. We looked up each first name in our list of editorships and accepted the gender prediction if the most likely gender had a score of at least 85%. In the end, we used genderize.io predictions for about half of our editorships.
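The 85% acceptance rule can be sketched as a small filter over one genderize.io result. This assumes the parsed JSON carries the `gender` and `probability` fields that the service's public API returns today; the format at the time of the study may have differed, and the function name is hypothetical. No network request is made here.

```python
from typing import Optional


def accept_prediction(response: dict, threshold: float = 0.85) -> Optional[str]:
    """Return 'male' or 'female' if the prediction meets the threshold.

    `response` is assumed to look like
    {"name": "alex", "gender": "male", "probability": 0.87, "count": 5856}.
    None means the name should be sent to MTurk workers instead.
    """
    gender = response.get("gender")
    probability = response.get("probability") or 0.0  # unknown names may carry null
    if gender in ("male", "female") and probability >= threshold:
        return gender
    return None
```

Under this rule the “Alex” example (87% men) would be accepted as male, while a name with, say, a 60% split would fall through to crowdsourcing.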

For the other half, we inferred the gender using Amazon MTurk, as described previously. For each editorship, we used the gender inference and confidence rating of five workers to create an aggregate gender score ranging from -1 (assuredly a man) to +1 (assuredly a woman), with a score of 0 being the dividing point for our gender inference.
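The exact formula for combining the five responses is not spelled out here. One plausible scheme, assumed purely for illustration, maps each worker's guess to a signed confidence (man negative, woman positive, "alternate identification" zero) and averages them, which naturally lands in the range -1 to +1. The function names and the handling of a score of exactly 0 are hypothetical choices.

```python
from typing import List, Tuple

# Assumed signs; "alternate" or any other answer contributes 0.
SIGN = {"man": -1.0, "woman": 1.0}


def aggregate_score(responses: List[Tuple[str, float]]) -> float:
    """Average signed confidence: -1 = assuredly a man, +1 = assuredly a woman.

    Each response is (guess, confidence) with confidence in [0, 1].
    """
    if not responses:
        raise ValueError("need at least one worker response")
    total = sum(SIGN.get(guess, 0.0) * conf for guess, conf in responses)
    return total / len(responses)


def infer(score: float) -> str:
    """Classify with 0 as the dividing point; an exact tie is left undetermined."""
    if score > 0:
        return "woman"
    if score < 0:
        return "man"
    return "undetermined"
```

For instance, three confident "woman" answers, one hesitant "man", and one "alternate identification" would give a positive score and an inference of woman.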

In addition to performing gender inference, we augmented and cleaned our data a bit. At the journal level, we downloaded 5-year impact factors from JCR, classified each journal as “pure,” “applied,” or “both,” and determined the publishing house from which each journal originated. At the individual editorship level, we geocoded any geographic information associated with an editor (e.g., their university or their city), and we collapsed the plethora of editorial board titles (editor, editor-in-chief, associate editor, member, etc.) into three categories: “editor,” “managing,” and “other.” You can see all of these details in the downloadable data set.

Challenges and Surprises

One of the greatest challenges in analyzing our data was dealing with potential inaccuracies or biases in our gender inference procedure. To account for this, we divided the data into strata: names determined as male by genderize.io, names determined as female by genderize.io, and names with MTurk gender inference scores, divided into 11 bins ranging from -1 to +1. From each stratum, we randomly sampled 30 names and inferred their gender ourselves using information on the Internet. We then calibrated our data based on these findings. For instance, 783 names in our data set were determined to be female by genderize.io. We sampled 30 of these and found that 25 were women but 5 were actually men. Therefore, for the remaining 753 names in the stratum, we counted each one as 5/6 woman and 1/6 man (since 25/30 = 5/6). Though this might seem strange, it results in more accurate data; we believe our data overall has approximately 97.5% accuracy.
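The calibration arithmetic for one stratum can be sketched with the numbers from the genderize.io “female” stratum above. Counting the 30 audited names at their verified genders is our reading of the procedure, and the function name is hypothetical; exact fractions avoid rounding.

```python
from fractions import Fraction
from typing import Tuple


def calibrated_counts(stratum_size: int, sample_size: int,
                      sampled_women: int) -> Tuple[Fraction, Fraction]:
    """Expected (women, men) counts for a stratum after audit-based calibration.

    Audited names count at their verified gender; each remaining name counts
    fractionally, using the audited share of women.
    """
    sampled_men = sample_size - sampled_women
    share_women = Fraction(sampled_women, sample_size)
    remaining = stratum_size - sample_size
    women = sampled_women + remaining * share_women
    men = sampled_men + remaining * (1 - share_women)
    return women, men


w, m = calibrated_counts(783, 30, 25)
print(float(w), float(m))  # 652.5 130.5
```

The 753 unaudited names contribute 627.5 women and 125.5 men, and the two totals still add up to the 783 names in the stratum.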

One of the most surprising and inspiring parts of this project was the Amazon MTurk worker community. First of all, this community is remarkably fast and efficient. We ran over 6,500 names through our MTurk gender inference procedure. Since each name was inferred by five workers, this amounted to more than 32,500 tasks, yet it took the MTurk workers merely a few hours to complete them. At the same time, we received many helpful, personal messages from MTurk workers. Sometimes they wrote to ask for clarification, so that they could produce top-quality work for us; other times they suggested modifications to our web form that would improve the data gathering process.

Results

Overall, we found that 8.9% of editorships in our data set were held by women. This is even lower than the 16% representation among faculty at doctoral-granting mathematical sciences departments mentioned earlier.

By Journal

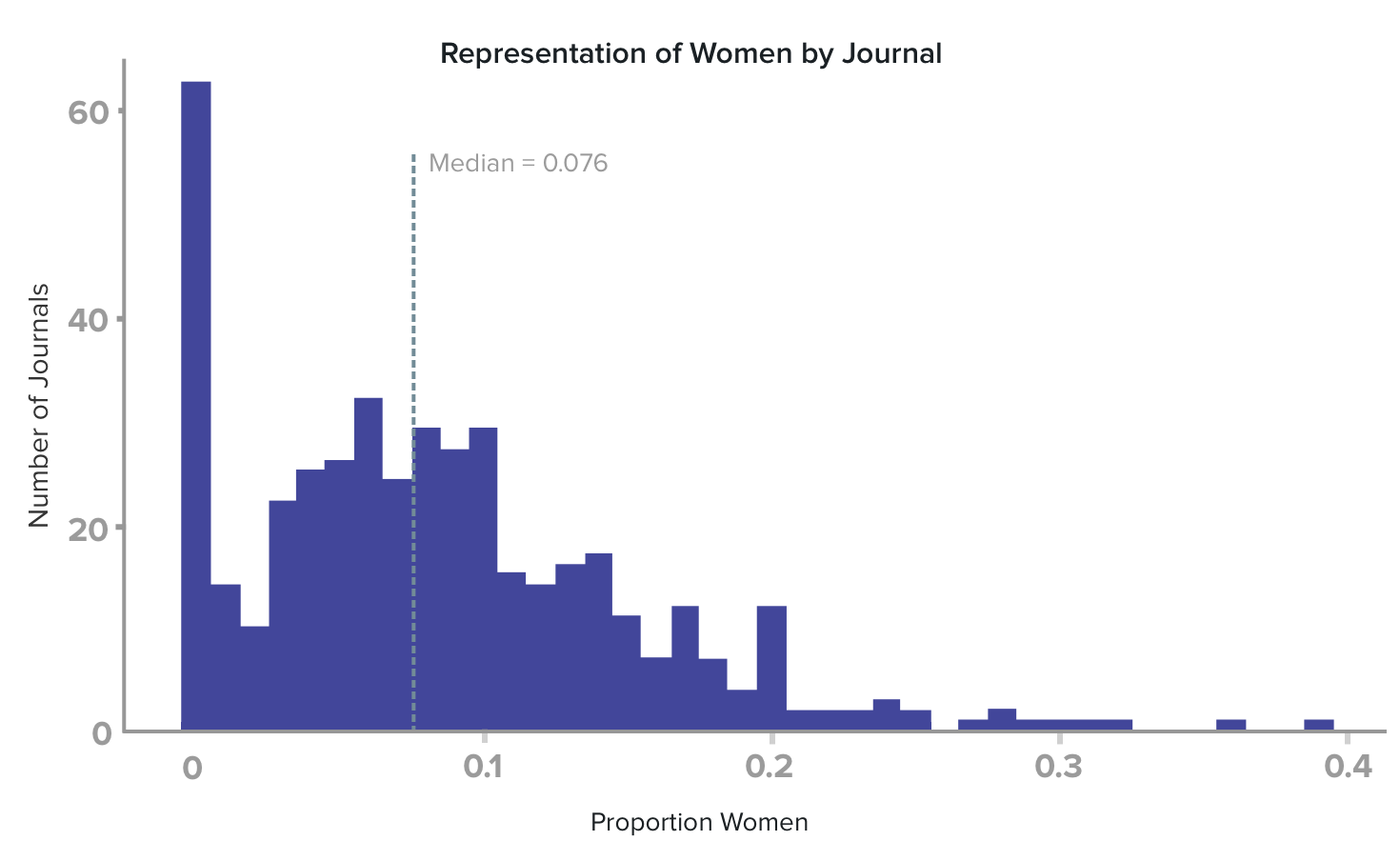

Histogram of journals by percent of editorships held by women

The median journal’s board has merely 7.6% of its editorships held by women. Fifty-one of the 435 journals have no women editors at all. This group includes some of the most prestigious mathematics journals, such as Annals of Mathematics, Communications on Pure and Applied Mathematics, Inventiones Mathematicae, Journal of Algebraic Geometry, Journal of Differential Geometry, and Mathematische Zeitschrift.

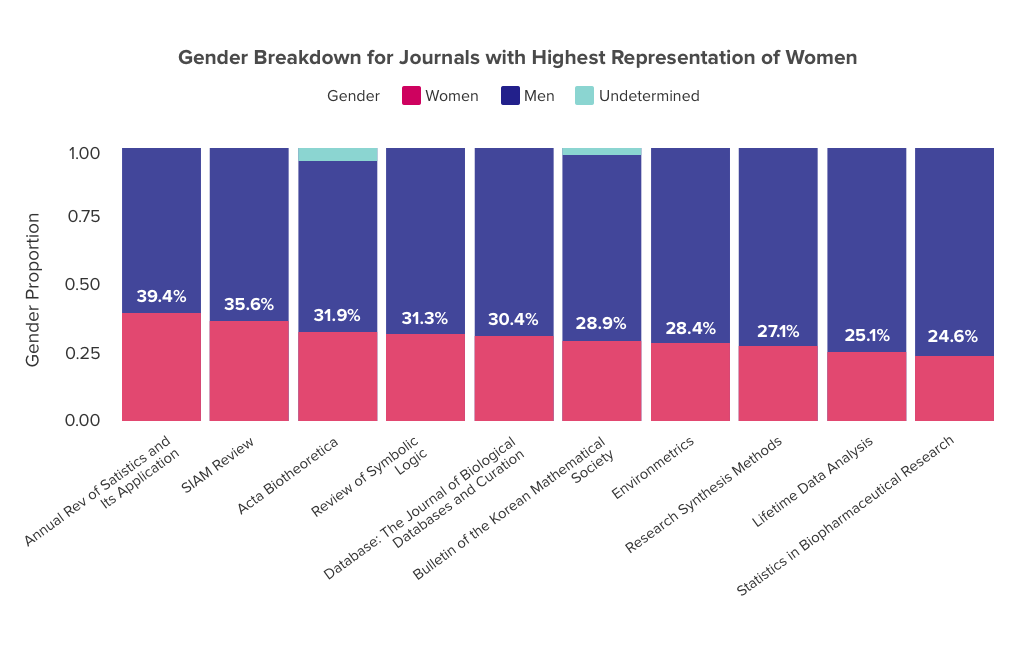

Gender breakdown for editorial boards with highest representation of women (restricted to journals with at least 10 editors)

Five journals turn out to have representation of women that is statistically significantly higher than the background level: SIAM Review, Database, Environmetrics, Research Synthesis Methods, and Lifetime Data Analysis.
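The test behind these comparisons is not specified here. Assuming an exact one-sided binomial test of a group's count of women against the overall background rate of 8.9%, a sketch looks like this. The helper names are hypothetical, and probabilities are computed in log space so that large groups, such as whole countries, do not overflow.

```python
from math import exp, lgamma, log


def binom_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p), computed in log space to avoid overflow."""
    if p <= 0.0:
        return 1.0 if k == 0 else 0.0
    if p >= 1.0:
        return 1.0 if k == n else 0.0
    log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
               + k * log(p) + (n - k) * log(1 - p))
    return exp(log_pmf)


def upper_tail_p(women: int, n: int, p0: float) -> float:
    """One-sided p-value P(X >= women): is the group's share unusually high?"""
    return min(1.0, sum(binom_pmf(k, n, p0) for k in range(women, n + 1)))


def lower_tail_p(women: int, n: int, p0: float) -> float:
    """One-sided p-value P(X <= women): is the group's share unusually low?"""
    return min(1.0, sum(binom_pmf(k, n, p0) for k in range(0, women + 1)))
```

For a hypothetical board of 20 editors with 8 women, `upper_tail_p(8, 20, 0.089)` gives the chance of seeing at least that many women if the board simply mirrored the background rate. Screening all 435 journals at once would normally also call for a multiple-comparison correction; which one, if any, the study applied is not stated.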

By Publisher

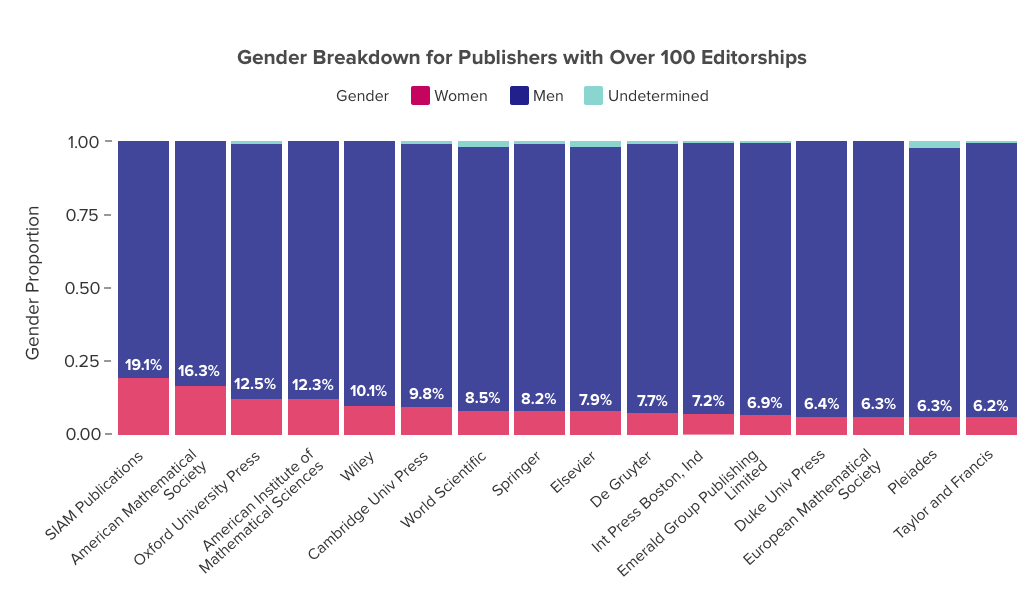

Gender breakdown for publishers with over 100 editorships

We grouped our data by the publishing house of each journal, resulting in 123 groups. The median publisher has 7.3% of editorships held by women. Restricted to publishers with at least 100 editorships, the publishers with the highest representation of women are all professional societies and university presses: SIAM Publications, the American Mathematical Society, Oxford University Press, and the American Institute of Mathematical Sciences. Only SIAM Publications is statistically significantly higher than the background level.
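Grouping works the same way for journals, publishers, and countries. A minimal sketch, assuming each editorship is reduced to a (group, weight) pair where the weight is the calibrated share of a woman for that editorship (1, 0, or a fraction such as 5/6); the function names are hypothetical:

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, Tuple


def percent_women_by_group(rows: Iterable[Tuple[str, float]],
                           min_size: int = 0) -> Dict[str, float]:
    """Percent of editorships held by women in each group.

    Each row is (group, weight_woman). Groups with fewer than `min_size`
    editorships are dropped, e.g. min_size=100 for the publisher chart.
    """
    totals: Dict[str, int] = defaultdict(int)
    women: Dict[str, float] = defaultdict(float)
    for group, weight in rows:
        totals[group] += 1
        women[group] += weight
    return {g: 100.0 * women[g] / totals[g] for g in totals if totals[g] >= min_size}


def median_group_percent(rows: Iterable[Tuple[str, float]], min_size: int = 0) -> float:
    """Median across groups (e.g. publishers) of the percent held by women."""
    return median(percent_women_by_group(rows, min_size).values())
```

Taking the median over groups, rather than pooling all editorships, gives each publisher equal weight regardless of size, which is why it can differ from the overall 8.9%.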

By Country

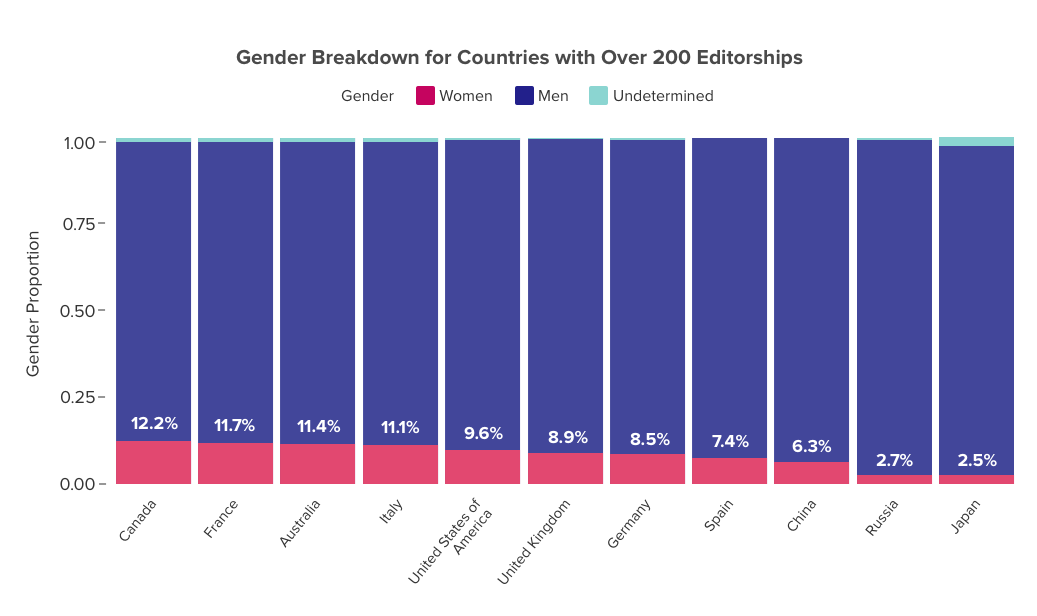

Gender breakdown for countries with over 200 editorships

We grouped our editorships by country and found that the median country has 6.3% of editorships held by women. No country has a statistically significantly higher representation of women than the background level, but two countries, Russia and Japan, are significantly lower.

By Board Title

We found no statistically significant difference in gender representation between groups having different editorial board titles when grouped as 'editor', 'managing', and 'other'. Perhaps a finer-grained treatment of these titles, as opposed to collapsing them into three groups, might shed more light.
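The test used here is not named. As an illustration only, a Pearson chi-square test of independence on a 2 × 3 table (women and men by title group) is a standard choice. With two degrees of freedom its p-value has the closed form exp(-x/2), so the sketch needs only the standard library. With calibrated fractional counts the test is approximate; the function name is hypothetical.

```python
from math import exp
from typing import Sequence, Tuple


def chi_square_2x3(women: Sequence[float], men: Sequence[float]) -> Tuple[float, int, float]:
    """Pearson chi-square test of independence for a 2 x 3 table.

    Columns are the three title groups ('editor', 'managing', 'other').
    Returns (statistic, degrees_of_freedom, p_value).
    """
    if len(women) != 3 or len(men) != 3:
        raise ValueError("expected exactly three title groups")
    col = [w + m for w, m in zip(women, men)]
    row = [sum(women), sum(men)]
    total = sum(row)
    stat = 0.0
    for observed_row, row_total in zip((women, men), row):
        for observed, col_total in zip(observed_row, col):
            expected = row_total * col_total / total  # assumes no empty row or column
            stat += (observed - expected) ** 2 / expected
    # (2 - 1) * (3 - 1) = 2 degrees of freedom, where the chi-square
    # survival function is exactly exp(-x / 2).
    return stat, 2, exp(-stat / 2)
```

A large p-value, as reported above, means the observed split across title groups is consistent with gender and title being independent.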

By Impact Factor

Using a statistical test that assesses associations between variables, we found a statistically significant but still quite weak positive association between a journal’s impact factor and the proportion of its editorships held by women.
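The specific test is not named here. One common way to measure such an association is Spearman's rank correlation, sketched below under that assumption, with tied values given average ranks. A p-value would require an additional step (for instance a permutation test), which is omitted; the helper names are hypothetical.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for t in range(i, j + 1):
            ranks[order[t]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: the Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when all values are tied")
    return cov / (sx * sy)
```

Applied to per-journal impact factors and percentages of women, a value slightly above 0 would correspond to the weak positive association described above. Because it uses ranks, the measure is insensitive to the skew typical of impact factors.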

Conclusion

Before undertaking this study, some professional colleagues told us, effectively, “yeah, the representation of women on editorial boards is low, but it just reflects the low representation in the field overall.” Our data show that this is not, in fact, true.

In addition to our overall (low) count of women on editorial boards, we were surprised to find groups that were statistical outliers, with either higher or lower representation of women than the background level.

Our study opens up many questions that might be addressed by the social sciences. First off, why? Why are there so few women on editorial boards? Are they not being considered as part of the potential pool? Are they being considered, but in the end, not being asked? Are they being asked but refusing the invitations? Second, once we better understand why, can we develop policies and procedures that increase gender diversity on boards?

Beyond these social science questions, we hope that our scalable methodology can be used to repeat this study in the future, giving a sense of whether gender representation is improving over time.

Additionally, we’d like to examine other fields. For example, engineering, physics, and computer science face gender representation issues similar to those in mathematics. We also find ourselves asking, “Why stop with gender?” Diversity has many different axes. Racial and ethnic minorities tend to be drastically underrepresented in STEM fields as well. Of course, large-scale studies of racial and ethnic representation pose challenges different from those of gender. We suspect it is much more difficult to determine race or ethnicity from a name, so we would need to develop other automated or crowdsourced methods to reliably determine a person’s background. These difficulties argue for a different data collection strategy: journals could simply request that editorial board members declare their gender, race, ethnicity, and so forth. Self-declared information would be the most reliable of all.

Many individuals and groups work hard to address issues of gender underrepresentation in mathematics and other fields. These include the Association for Women in Mathematics, the Society for Industrial and Applied Mathematics, the American Mathematical Society, the Mathematical Association of America, the Association for Women in Science, and the American Association of University Women, to name just a few. We encourage readers concerned with underrepresentation (and you all should be!) to become involved with these groups.

Chad Topaz, Shilad Sen