Project Results

We are excited to share that the work resulting from this experiment.com funding was published in the Computer Vision for Animal Behavior Tracking and Modeling Workshop at CVPR 2022, and was presented at the workshop poster session.

In the paper, we introduce methods for generating aggregate "ground truths" for fine-grained (i.e. multi-class) seal counting from the collected annotations, as well as a counting method that can utilize the aggregate information. Our method improves results by 8% over a comparable baseline, indicating the potential for algorithms to learn fine-grained counting using crowd-sourced supervision.
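As a rough illustration of what turning crowd-sourced annotations into aggregate per-class counts can look like, here is a minimal sketch. It is not the method from the paper. It assumes a hypothetical annotation format (one `(image_id, annotator_id, class_label)` tuple per marked seal), hypothetical class names, and a simple per-class median across annotators as the aggregation rule.

```python
from collections import defaultdict
from statistics import median

# Hypothetical class names, loosely following the species/sex/age groups
# described on this page. They are assumptions, not the dataset's label set.
CLASSES = [
    "elephant_male", "elephant_female", "elephant_pup",
    "fur_male", "fur_female", "fur_pup",
]


def aggregate_counts(annotations):
    """Aggregate per-annotator marks into one count per image and class.

    annotations: iterable of (image_id, annotator_id, class_label) tuples,
    one tuple per seal an annotator marked (an assumed format).
    Returns {image_id: {class_label: median count across annotators}}.

    Caveat: an annotator who viewed an image but marked nothing does not
    appear in the input, so they are not counted as a zero here.
    """
    # counts[image_id][annotator_id][class_label] -> number of marks
    counts = defaultdict(lambda: defaultdict(lambda: defaultdict(int)))
    for image_id, annotator_id, label in annotations:
        counts[image_id][annotator_id][label] += 1

    aggregated = {}
    for image_id, per_annotator in counts.items():
        aggregated[image_id] = {
            cls: median(marks.get(cls, 0) for marks in per_annotator.values())
            for cls in CLASSES
        }
    return aggregated


# Three hypothetical annotators ("a", "b", "c") label the same image.
example = [
    ("img1", "a", "fur_pup"), ("img1", "a", "fur_pup"), ("img1", "a", "fur_female"),
    ("img1", "b", "fur_pup"), ("img1", "b", "fur_pup"), ("img1", "b", "fur_pup"),
    ("img1", "c", "fur_pup"), ("img1", "c", "fur_male"),
]
result = aggregate_counts(example)
print(result["img1"]["fur_pup"])  # annotators counted 2, 3, and 1 pups
```

The median is used here only because it is robust to a single annotator who over- or under-counts; other aggregation rules are equally plausible.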

The paper can be found on arXiv here, and a digital copy of the poster can be found here.

About This Project

Seals are important indicators of the health of our oceans, but it is often difficult to monitor their populations, especially in remote regions. Time-lapse cameras have made this monitoring more feasible; however, the scale of data produced by these tools is prohibitively large. This project will enable automated AI-based analysis of this data via the publication of a large, high-quality dataset of images of seal populations collected on remote islands near Antarctica over the last ten years.

What is the context of this research?

Methods in computer vision have shown impressive results in recent years; however, the range of tasks supported is still limited. Notably, much existing work deals with the detection or classification of a few objects in a scene, or with counting people in a crowd. The classification of seal sex, species, and age in imagery is extremely challenging, both for scientists and automated methods, and exposes several open research challenges in computer vision. Many indications of sex or species are highly contextual, and seals are often tightly clustered into groups or may blend in with background environments. This project aims to develop a high-quality dataset and benchmark to encourage the computer vision research community to focus on these high-impact challenges.

What is the significance of this project?

Seals are sentinels of changes within their ecosystem. Because they spend the majority of their lives in water and are at the top of the food chain, variation in the size or structure of their populations may be representative of larger changes to dynamic ocean ecosystems. Measuring these changes year-round can help us better understand how threats to the ecosystem disrupt the dynamics of resident wildlife. Automated analysis would enable this research to take place on a much larger scale than is currently possible.

Within computer vision, datasets and benchmarks are crucial for pushing the field forward. This project would help to align computer vision research with the study of the natural world and encourage the development of state-of-the-art algorithms which benefit the oceans.

What are the goals of the project?

For this project we will evaluate the efficacy of using AI and computer vision to automate the analysis and classification of seals. To do this, we will first expand upon the work of Seal Watch, a citizen science project which collected nearly 200,000 annotations of seals in imagery from South Georgia and the South Sandwich Islands, to create a high-quality computer vision dataset. We will then fine-tune and benchmark existing AI algorithms for counting and classifying seals, and publish the dataset and results in order to further engage the computer vision research community on this challenge. The requested funds will support annotation labor, cloud computing and storage costs, and researcher labor. All results, data, and models will be open sourced to the public.

Budget

Expert Annotations: In order to accurately evaluate the performance of automated algorithms, we will collect a small but high-quality expert-annotated test set of images in addition to the existing citizen science annotations.

Computing Costs: We will benchmark existing computer vision algorithms, which require training machine learning models on high-end GPU computers in the cloud. These services are billed by the hour. These funds will cover this training as well as storage and hosting costs for the dataset, which will be released for public download.

Researcher Labor: This will help fund the computer vision research effort, including dataset curation, code development, and experiment execution and analysis.

New algorithm development: Funding for this stretch goal will allow us to research new ways to improve AI algorithm performance. By improving upon existing algorithms we can allow for more detailed and accurate analysis of population dynamics and behavior.

Project Timeline

Upon funding, we will begin to distill the 200,000 citizen science annotations we have collected into an accurate, high-quality dataset in a format that can be easily used by other researchers. We will also collect a test set of annotations from expert ecologists, which will be the gold standard for evaluating algorithms. We will develop (and open source) our own algorithms and models, and publish the dataset on the web and, hopefully, through a journal or conference.

Mar 09, 2022

Project Launched

Apr 30, 2022

Finalize annotation collection - organize existing Zooniverse annotations and create new expert annotations.

May 13, 2022

Generate machine learning compatible labels and create robust training and testing sets.

Jun 17, 2022

Develop a suite of benchmark computer vision / machine learning algorithms. Train these models on the dataset and evaluate results.

Jul 15, 2022

Compile a results report / publication. Open source all code, data, and models and disseminate to the public.

Meet the Team

Justin Kay

My background is in computer science and machine learning, and I am excited about the ways that these fields can support ecological science and conservation. In 2019 I co-founded Ai.Fish LLC, an artificial intelligence company focused on computer vision applications in marine conservation and sustainability, where I currently serve as the CTO. In this role I have led computer vision projects in the marine space in collaboration with several incredible NGOs including the National Fish and Wildlife Foundation and the Environmental Defense Fund. I have additionally collaborated with The Nature Conservancy on their open source fisheries dataset fishnet.ai, and presented that dataset to the computer vision research community through a workshop publication at last year's CVPR conference. I also work part-time as a researcher in the Computational Vision Lab at Caltech, where we are developing computer vision methods for monitoring migrating salmon.

These experiences have taught me that (i) emerging AI technologies can have a significant positive impact on scaling ecological monitoring projects around the globe, and (ii) impactful research in AI is often driven by high-quality benchmarks and datasets that encourage other researchers to help push the state of the art. I am very excited for the opportunity to collaborate with Seal Watch to help develop methods to understand seals and encourage other computer vision researchers to do the same.

Catherine Foley

I am a quantitative ecologist with interests focused on applied spatial and conservation ecology. In particular, much of my research is concerned with developing the use of emerging technologies for marine conservation and management in a variety of ecosystems. I maintain active collaborations in the fields of Antarctic and tropical marine ecology, in which I completed my Ph.D. and postdoctoral work.

I am the lead researcher of Seal Watch. Through this project I have collected 10 years of camera trap data of seal populations in South Georgia and the South Sandwich Islands. Through the Zooniverse platform we have collected nearly 200,000 annotations on this data from passionate citizen scientists around the world. This experiment.com project will help curate this data into a high-quality dataset which will enable further study of these fascinating and ecologically crucial species.

Additional Information

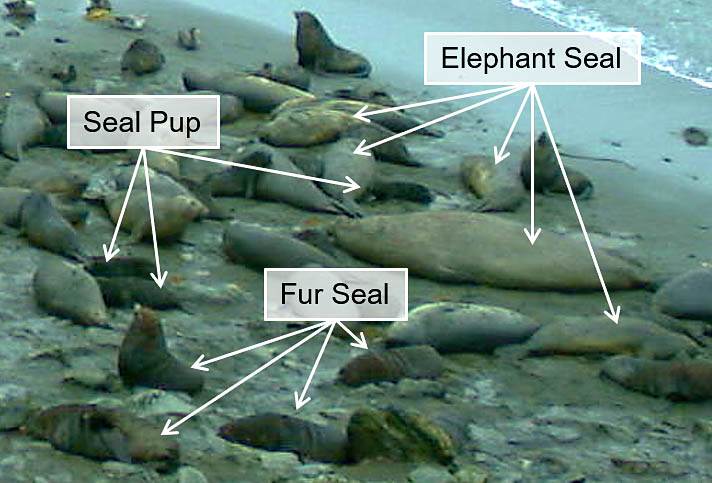

Images in the dataset may contain a combination of Southern elephant seals and Antarctic fur seals. Distinguishing between the two species is very important for scientists to be able to monitor the populations of each type of seal. Additionally, identifying males, females, and pups can be very challenging; however, getting good estimates of the number in each group is very important for understanding how their populations are changing. Automated computer vision algorithms will need to perform all of these classifications (species, sex, and age).

Elephant Seals

Male elephant seals are perhaps the easiest to identify due to their enormous size. These seals will be 2-4 times larger than other seals. Female elephant seals will be substantially smaller than males, but slightly larger than male fur seals, and have a rotund, blubbery body. Elephant seal pups will be substantially smaller than females. When they are first born they will have thick black fur, which will molt shortly before they leave for the sea. Elephant seal pups will always be located close to a female elephant seal.

Fur Seals

Male fur seals are substantially larger than females and generally have dark brown fur. Female fur seals are smaller and their fur can be more variable in color, generally with a tan belly and brownish-gray back. Fur seal pups are small with dark brown fur. Later in the breeding season, pups are left alone without parents nearby and may play in large groups on the beach.

Example Photographs

All of this information must be extracted from challenging camera trap imagery. These cameras are placed to survey large populations and thus the animals of interest are small and very hard to differentiate.

Thank you for your interest, and we hope you will help support our project! Please enjoy some cute seal pics:

All photos courtesy of Catherine Foley. Please do not duplicate or share without permission.

Project Backers

- 27 Backers

- 100% Funded

- $5,001 Total Donations

- $185.22 Average Donation