Methods

Summary

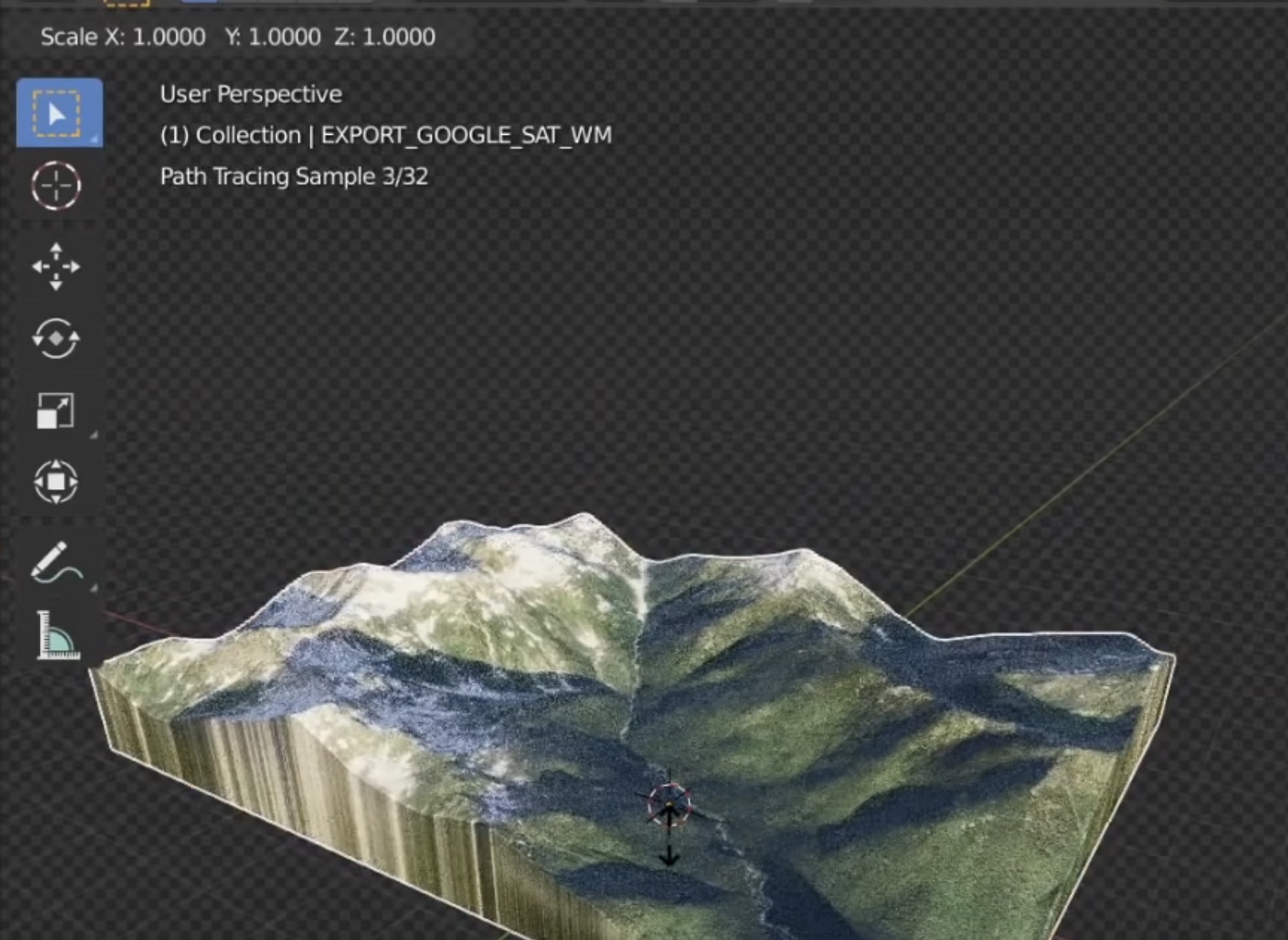

To create our 3D models, we will try two approaches. First, we will take topographical data from Google Earth and build 3D models of the environments included in our narratives. We will apply landscape texture maps based on our historical geography and archival research on the landscapes of San Diego, then place the textured models in Unity or Unreal Engine to turn them into navigable environments. Second, once we have completed a first iteration of the proposed project, we may experiment with aerial photogrammetry and LiDAR drone scanning to create our models.
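
The step from topographical data to a navigable model is essentially converting a grid of elevations into a triangle mesh. As a minimal sketch, this code assumes the elevation data has already been exported or sampled into a regular grid in metres. Google Earth does not necessarily provide data in this form, so treat that as an assumption. The function builds the vertex and triangle lists that an engine such as Unity works with. The function name `heightmap_to_mesh` and its `stride` parameter are hypothetical. A stride above 1 skips samples, which produces the kind of low-poly mesh discussed under Challenges.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def heightmap_to_mesh(
    heights: List[List[float]],
    cell_size: float,
    stride: int = 1,
) -> Tuple[List[Vertex], List[Triangle]]:
    """Convert a regular grid of elevations into a triangle mesh (hypothetical helper).

    heights[row][col] is an elevation in metres; cell_size is the horizontal
    spacing between adjacent samples in metres. A stride > 1 keeps only every
    stride-th sample in each direction, producing a lower-poly mesh (trailing
    samples that do not fall on the stride are dropped).
    """
    rows = list(range(0, len(heights), stride))
    cols = list(range(0, len(heights[0]), stride))
    # Y-up convention: x from the column index, y is elevation, z from the row index.
    vertices: List[Vertex] = [
        (c * cell_size, heights[r][c], r * cell_size) for r in rows for c in cols
    ]
    triangles: List[Triangle] = []
    width = len(cols)
    for i in range(len(rows) - 1):
        for j in range(width - 1):
            a = i * width + j  # top-left corner of this grid cell
            b = a + 1          # top-right
            c = a + width      # bottom-left
            d = c + 1          # bottom-right
            # Two triangles per cell; the winding order may need flipping
            # depending on the target engine's front-face convention.
            triangles.append((a, c, b))
            triangles.append((b, c, d))
    return vertices, triangles
```

With a 3 by 3 grid, `stride=1` yields 9 vertices and 8 triangles, while `stride=2` yields only 4 vertices and 2 triangles. That reduction in triangle count is what a small but detailed texture map would then have to compensate for.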

Challenges

The idea of creating 3D landscape models is not new. What is new in our proposal is to model historical landscapes, to make them not only 3D but immersive (360º), and to have them occlude present-day landscapes in the viewport on location. This endeavor faces three main challenges: 1) the processing limits of mobile phones, 2) overlay accuracy, and 3) the viability of using Google Earth models in a 360º context.

Regarding point 1, we plan to pair low-poly meshes with single, small but detailed texture map images. This approach has worked well in our 3D photogrammetry scans of cultural objects such as ha kwaiyo. Regarding point 2, our priority at this stage is getting the aesthetics and scanning right. We are therefore willing to provide specific user instructions in the app UI rather than write a script that generates the landscape on the fly. First things first. Regarding point 3, the ubiquitous accessibility of Google Earth nearly outweighs the risk that it cannot deliver the aesthetic vision we believe is possible with 360º drone LiDAR. We may rethink how we present the overlays if doing so makes it far easier to replicate historical overlay experiences at other sites.
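
For the overlay-accuracy challenge, the core calculation is converting the phone's GPS fix into an offset from the historical model's origin. This sketch assumes each model is anchored at a surveyed latitude/longitude origin, which is a hypothetical setup not specified above. It uses an equirectangular approximation, which is generally adequate over the few hundred metres a single site is likely to span. Consumer phone GPS is typically accurate only to within several metres, which is one reason explicit user instructions in the UI may still be needed. The function name `gps_to_local_offset` is hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def gps_to_local_offset(lat: float, lon: float, origin_lat: float, origin_lon: float):
    """Approximate (east, north) offset in metres of a GPS fix from a model origin.

    Hypothetical helper using an equirectangular approximation: longitude
    differences are scaled by the cosine of the mean latitude, so distances
    shrink toward the poles. Accuracy degrades over large areas.
    """
    d_lat = math.radians(lat - origin_lat)
    d_lon = math.radians(lon - origin_lon)
    mean_lat = math.radians((lat + origin_lat) / 2.0)
    east = EARTH_RADIUS_M * d_lon * math.cos(mean_lat)
    north = EARTH_RADIUS_M * d_lat
    return east, north
```

At San Diego's latitude (about 32.7º N), a step of 0.001º in longitude corresponds to roughly 93.6 m east. The same step in latitude is roughly 111.2 m north. In an engine scene, these two values would set the horizontal position of the viewer relative to the model origin.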

Pre-Analysis Plan

We will conduct user testing on both the aesthetic preferences and the educational effectiveness of the presentations. For aesthetics, we will give users several different experiences, each presenting a similar landscape in a different format, to identify user experience issues. For educational effectiveness, we will administer intake and exit surveys before and after our focus group users view the experiences. These surveys will gauge the users' level of excitement, their interest in learning more, their likelihood of sharing the application with a friend or family member, and similar measures of positive engagement.
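
The plan does not yet specify how intake and exit responses will be compared. One common option is to compute each participant's change and a paired t statistic. This sketch assumes each measure is a numeric rating, for example a 1 to 5 Likert score, matched per participant. The function name `paired_change` is hypothetical. With small focus groups, the statistic is best read as descriptive rather than conclusive.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_change(intake: Sequence[float], exit_: Sequence[float]) -> Tuple[float, float]:
    """Return (mean change, paired t statistic) for matched intake/exit ratings.

    intake[i] and exit_[i] must belong to the same participant.
    """
    if len(intake) != len(exit_) or len(intake) < 2:
        raise ValueError("need at least two matched intake/exit pairs")
    # Per-participant change from intake to exit.
    diffs = [after - before for before, after in zip(intake, exit_)]
    mean_diff = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the changes
    if sd == 0:
        raise ValueError("all changes are identical; the t statistic is undefined")
    # Paired t statistic: mean change divided by its standard error.
    t = mean_diff / (sd / math.sqrt(len(diffs)))
    return mean_diff, t
```

For example, hypothetical intake scores `[2, 3, 3, 4, 2]` and exit scores `[4, 4, 3, 5, 3]` give a mean change of 1 and a t statistic of √10, about 3.16.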
